About Me

I am a Data Engineer at my core, with hands-on experience across the entire data lifecycle, from raw data ingestion and pipeline design to analytics, machine learning, and AI-powered applications. I specialize in building scalable, reliable data systems that enable downstream analytics, data science, and intelligent decision-making.

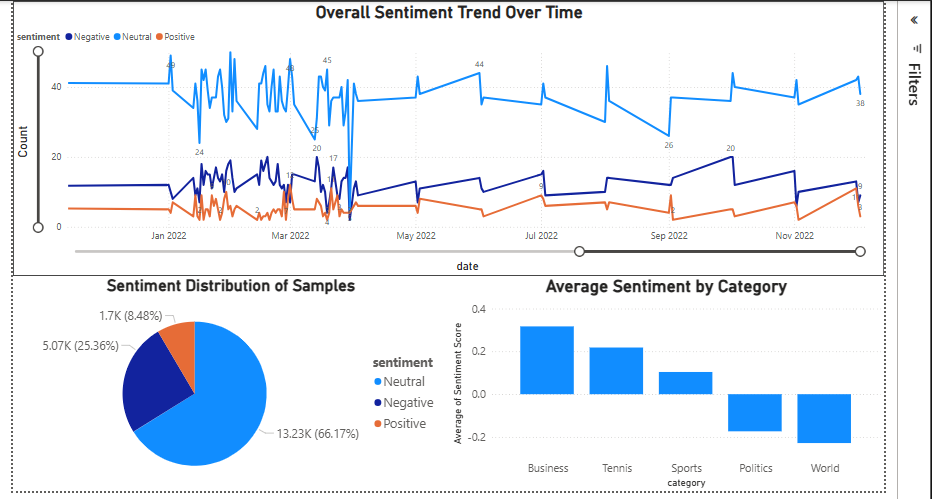

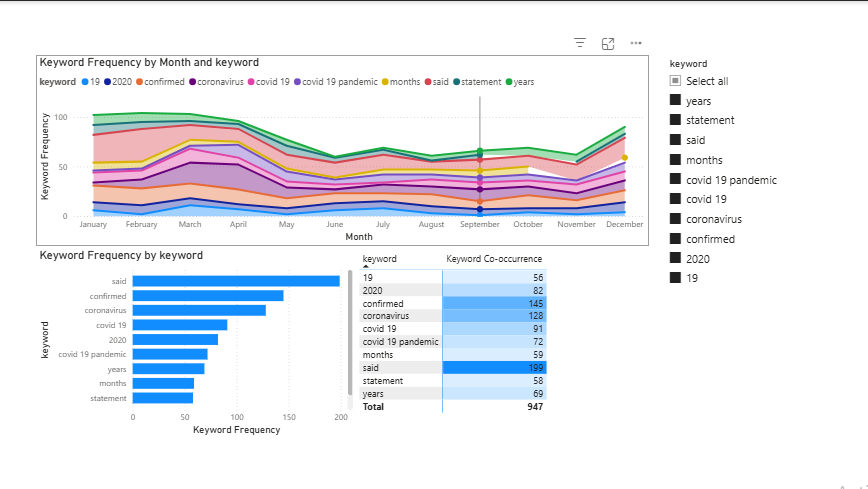

My background spans data engineering, data analysis, data science, and AI engineering, allowing me to work end-to-end: performing exploratory data analysis, designing ETL/ELT pipelines, modeling data for analytics, training and deploying machine learning models, and delivering insights through dashboards and APIs.

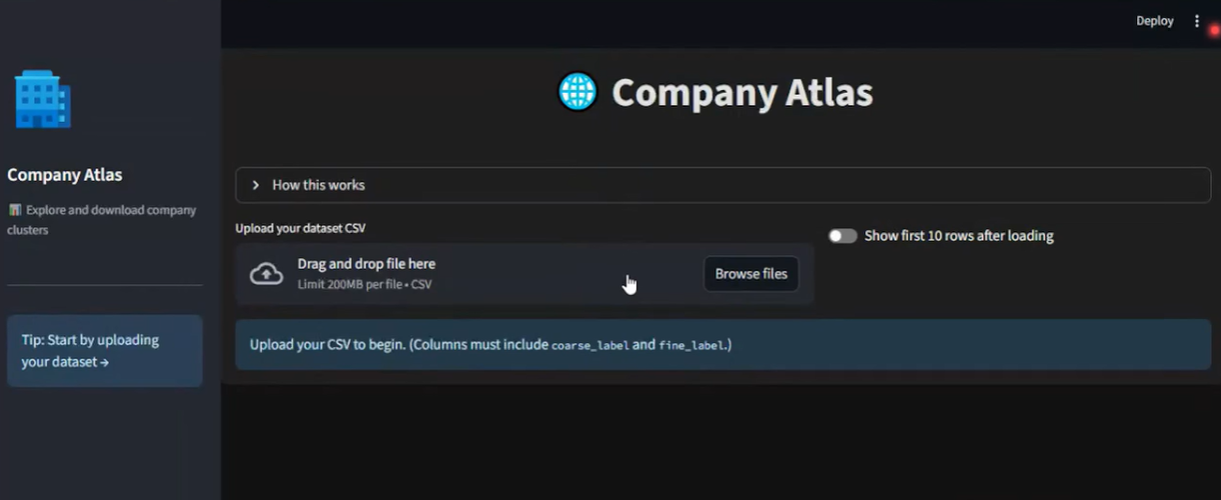

I have also worked on AI engineering and Generative AI systems, including LLM-based applications, LangChain pipelines, vector databases, and agentic AI workflows, integrating them with structured and unstructured data sources. This enables me to bridge traditional data platforms with modern AI-driven solutions.

I focus on building solutions that are production-ready, scalable, and aligned with real-world business and research needs. I bring strong proficiency in Python, SQL, data pipelines, machine learning workflows, and data visualization. My strength lies in connecting data engineering foundations with advanced analytics and AI to deliver measurable impact.